Update: Well, it looks like some internet stranger has not only found this post, but they posted it on Hacker News! As it turns out, actual humans are reading this! It should be noted, however, that this post got absolutely roasted. And righteously so. I drafted this post in a dreadful manner, then let ChatGPT take a few swings at it, so it is more than fair that I’d be called out for such clankerism. With that out of the way, I have folded in the feedback from that thread and rewritten much of this post, since I am no longer shouting into the void.

If you don’t want to read my mad ramblings, go straight to the Ansible Playbook for Running WordPress from RAM (On My GitHub).

Background

Of all the absolutely terrible projects I have had to endure in my career (and there have been many), nothing has tried my patience quite like the WordPress sites I would inherit technical custody of from other agencies. The agency for which I was the seniormost technical talent did a lot of enterprise WordPress and Magento. A client would come to us, hand us their site, and we would have to onboard it to new hosting. Of the 100+ WordPress sites that I have had to keep running over the last decade or so, at least 80 of them came to us, right off the bat, with dozens of plugins that hadn’t seen an update since they were installed. Bloated page builder sites, unoptimized images, huge MySQL databases that badly needed to be cleaned up, and all of this running on servers that lacked optimization. The larger enterprise sites often had integrations with back-office systems that were support nightmares (SAP, warehouse management systems you’ve never heard of, and one site that had to talk to an ERP hosted on an AS/400). For business reasons, we had to keep these sites running until new sites could be built, and for those of you who haven’t worked with enterprise stuff, these redesign projects can take a long time. For enterprise-class WordPress sites, they would sometimes take over a year.

As I’ve gone about the challenge of working with these sites, I have learned a lot about performance tuning WordPress through the lens of a Linux sysadmin first and a WordPress “dev” second. WordPress itself can be made to run fast, and there are plugins that can get you there, but real speed comes from tuning the underlying server. No amount of caching plugins will make a slow site fast. You have to have a well-tuned server to run on.

I don’t work with WordPress professionally in my present role, but one day I was either reading something or talking to someone, and the thought dawned on me: “I wonder if you can just make WordPress run from RAM? Surely it will be faster because you skip the disk…” Ignoring all the obvious questions around persistence and the volatility of RAM, I decided to give it a try. I did this out of my own curiosity, and it tickled my ADHD in just the right way to make it my absolute obsession for the next few nights and weekends.

Why Should I Run WordPress From RAM?

TL;DR: Because latency is theft

Running the entire WordPress stack from RAM eliminates every source of disk I/O latency during live requests. There are no filesystem lookups, no inode traversal, no fsync stalls, no trips to the disk. PHP, MySQL, and the web server all operate on memory resident data. This leads to faster time to first byte, consistent performance under load, and no reliance on disk caches or shared storage.

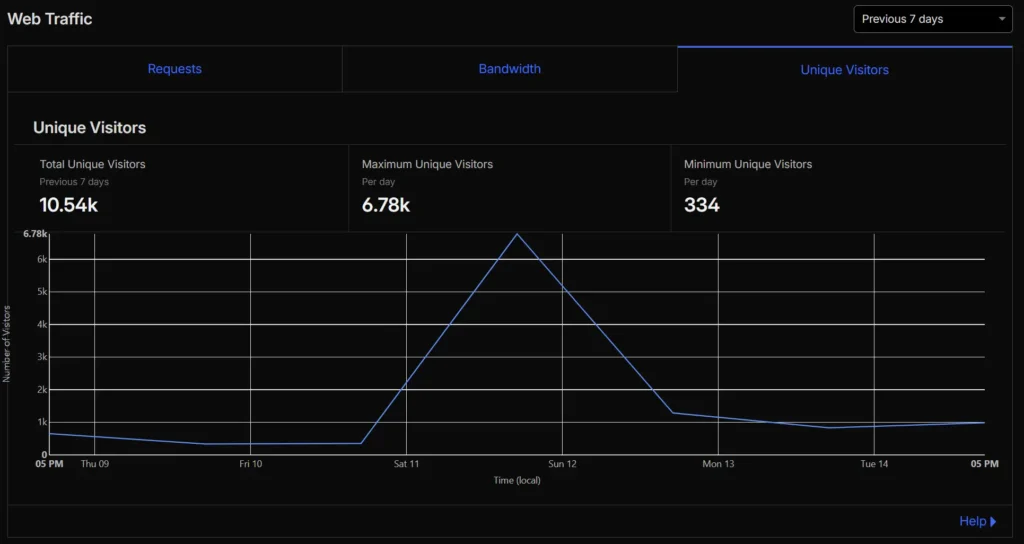

Thanks to a random reader of this post sharing this on Y Combinator’s Hacker News forum, I now have some data to show just how well this performs under a load of real users hitting my site!

Server Performance

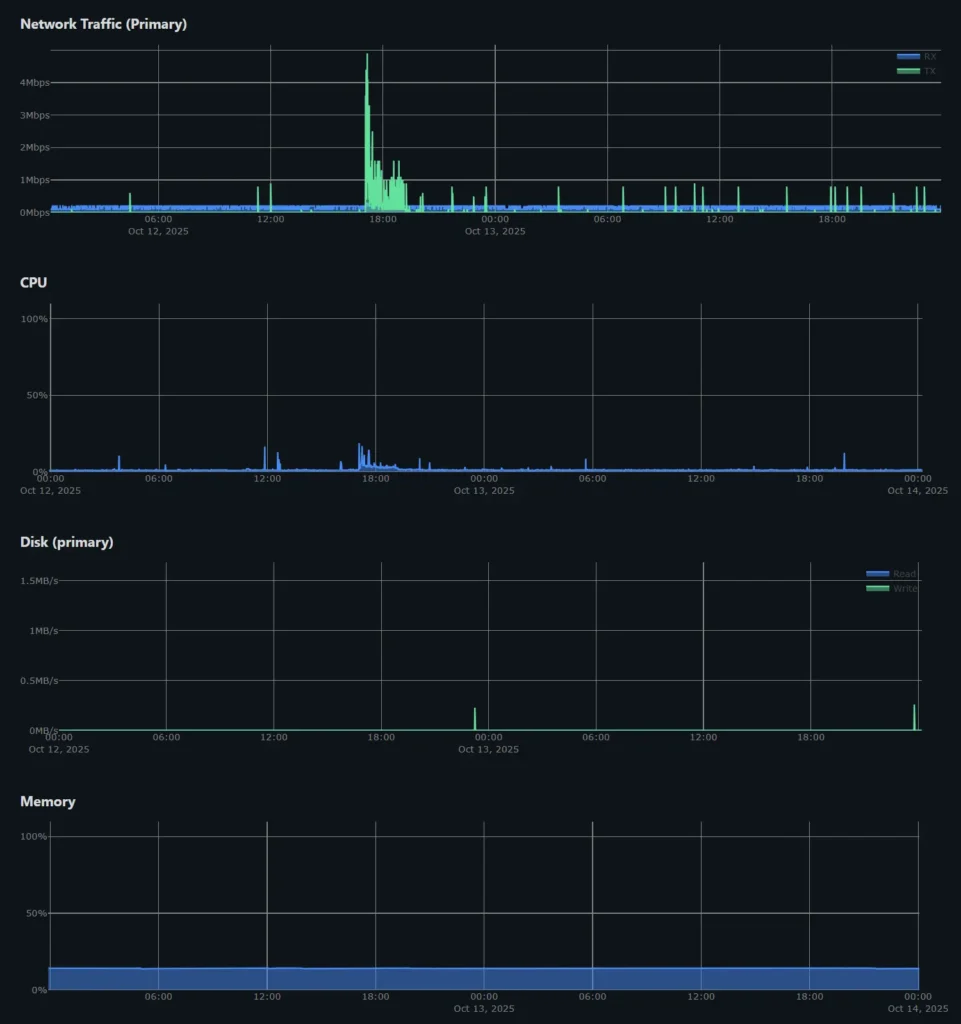

As you can see in the chart, just before and after 18:00 GMT, when the Hacker News post gave my site an internet hug, my network I/O spiked. CPU peaked at 18%, RAM utilization was flat before, during, and after the traffic event, and there wasn’t a single peep from the block storage device until almost 2 hours later. That peep was probably just the system writing something to /var/log/*.

Looking at the Cloudflare logs, and knowing that the bulk of my hits came in right away, it’s safe to say that I was seeing somewhere around 2-4k unique visitors per hour. It is important to note that I am using a CDN out front, since this stack runs on a single VM. However, because the geographic distribution of my traffic was very diverse, there were a lot of origin pulls to warm up Cloudflare’s caches at the edge. My Cloudflare cache settings are currently at their defaults, and I’m only on the free tier.

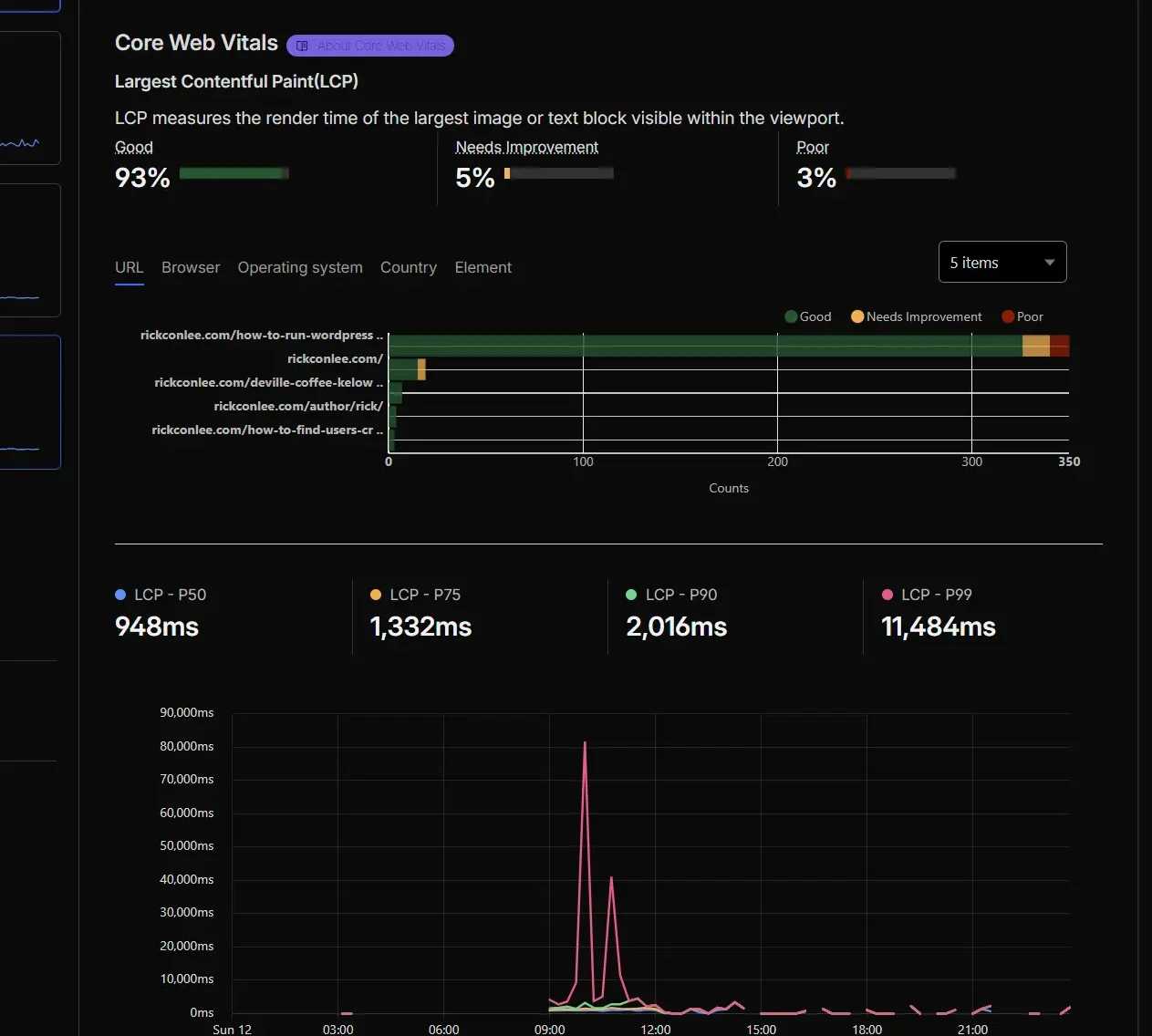

The core vitals tell a promising story. For the period that I was under the heaviest load, only 3% of the traffic sampled was rated poor for largest contentful paint (LCP), and 93% of the traffic sampled fell below Google’s recommended 2.5 second threshold. Interestingly, Cloudflare wasn’t doing all the work: I was seeing sub-second means while the bulk of that traffic was still going back to my origin, since Cloudflare was returning cf-cache-status: DYNAMIC (meaning those responses were not being served from its cache).
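For anyone unfamiliar with how those buckets work, here is a small sketch of the classification using Google’s published LCP thresholds (good at or under 2.5 s, poor above 4 s, needs improvement in between). The function name and the one-sample-per-line millisecond input are my own assumptions for illustration; this is not Cloudflare’s code.

```bash
# lcp_buckets: read LCP samples in milliseconds (one per line) on stdin and
# print the share of samples in each Core Web Vitals bucket.
# Thresholds: good <= 2500 ms, needs improvement <= 4000 ms, poor > 4000 ms.
lcp_buckets() {
  awk '
    NF == 0    { next }              # skip blank lines
               { n++ }               # count every real sample
    $1 <= 2500 { good++; next }
    $1 <= 4000 { ni++;   next }
               { poor++ }
    END {
      if (n == 0) { print "no samples"; exit 1 }
      printf "good %.0f%%\n", 100 * good / n
      printf "needs_improvement %.0f%%\n", 100 * ni / n
      printf "poor %.0f%%\n", 100 * poor / n
    }'
}

# Usage: printf '1200\n2400\n3000\n5000\n' | lcp_buckets
```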

My VM specs are as follows:

- 8 GB of RAM (DDR5 according to the host)

- 2 Cores, on an AMD EPYC

- I’m on a shared VM host

Overview Of The Tune

Here are more details about the stack itself. As mentioned previously, this is a middle-of-the-road VM from a commodity hosting provider (Not one of the hyperscalers like AWS/GCP/Azure).

RAMDisk-backed WordPress

The WordPress root is mounted in a tmpfs RAMDisk, created at provision time by Ansible.
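As a minimal sketch of what that mount looks like, assuming an fstab-based tmpfs mount (the size cap and mode are placeholders, not the playbook’s values), the helper below prints the entry. In Ansible, the ansible.posix.mount module with fstype: tmpfs and state: mounted writes the same kind of line and mounts it in one task.

```bash
# tmpfs_fstab_line: print an /etc/fstab entry for a size-capped tmpfs mount.
# Arguments: mount point, size cap (e.g. 2G), optional octal mode (default 0755).
tmpfs_fstab_line() {
  printf 'tmpfs %s tmpfs rw,nosuid,nodev,size=%s,mode=%s 0 0\n' \
    "$1" "$2" "${3:-0755}"
}

# Usage (as root; sizes are placeholders). The mount comes up empty on every
# boot, which is why the site is pulled back in from object storage.
#   tmpfs_fstab_line /mnt/wordpress 2G >> /etc/fstab
#   mkdir -p /mnt/wordpress && mount /mnt/wordpress
```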

Traditional disk I/O, whether it’s SATA SSDs, NVMe, or whatever else, adds latency to every request. Even if a file is cached by the OS in memory, every request still has to go through the full POSIX filesystem path resolution process. That means the kernel walks through each directory in the path, checks ownership and permissions at every level, and verifies access to the final file. These checks involve multiple syscalls like stat(), access(), and open(). They might be fast, but they are not free.
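To make that walk concrete, here is a tiny illustration that lists the prefixes resolved on the way to a file. It only models the lookups (it makes no syscalls itself), and the example path is hypothetical. The same walk happens on tmpfs too; the difference is that on tmpfs every dentry and inode it touches is already in RAM, so a cache miss can never fall through to disk. On a live box, strace -e trace=file attached to a PHP-FPM worker shows the real stat() and open() calls.

```bash
# path_walk: print each path prefix that has to be looked up, in order, to
# reach the final file of an absolute path. Every directory along the way
# needs a lookup plus a search (execute) permission check.
path_walk() {
  prefix=""
  old_ifs=$IFS
  IFS=/
  set -f                        # no globbing while splitting on "/"
  for part in $1; do
    [ -z "$part" ] && continue  # the leading "/" yields an empty field
    prefix="$prefix/$part"
    printf '%s\n' "$prefix"
  done
  set +f
  IFS=$old_ifs
}

# Usage: path_walk /mnt/wordpress/wp-includes/js/jquery/jquery.min.js
```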

On a traditional disk-backed filesystem, even when the file contents are cached, the metadata might not be. That means extra I/O just to verify the file is readable or executable. And in high concurrency scenarios, that metadata can get evicted or fragmented. That adds some jitter that you can’t tune away. A tmpfs mount holds both file data and metadata in RAM. Every permission check and inode traversal resolves from memory with no disk hit, no controller latency, and no I/O scheduler in the path. Here, we are removing entire classes of overhead.

At boot, we pull a known-good tarball from object storage like R2 or S3 and extract it into /mnt/wordpress, which is the tmpfs mount in the RAMDisk. The NGINX root points straight at that RAMDisk as well, for the static files. If the server crashes or reboots, it just pulls everything in again from object storage.
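Here is a hedged sketch of the extract step, assuming the tarball has already been downloaded to local disk (for example with aws s3 cp, adding --endpoint-url for R2). The function name is hypothetical. It reads the archive once before unpacking, so a truncated download fails loudly instead of leaving a half-populated web root.

```bash
# restore_site: unpack a known-good site tarball into a (RAM-backed) web root.
# Arguments: local path to a .tar.gz, target directory (e.g. /mnt/wordpress).
restore_site() {
  tarball=$1
  target=$2
  if [ ! -f "$tarball" ]; then
    echo "missing tarball: $tarball" >&2
    return 1
  fi
  # Read the whole archive first: a corrupt or truncated download fails
  # here, before anything is written into the web root.
  tar -tzf "$tarball" >/dev/null || return 1
  mkdir -p "$target" || return 1
  tar -xzf "$tarball" -C "$target"
}

# Example boot sequence (bucket and key are placeholders; R2 also needs
# --endpoint-url https://<ACCOUNT_ID>.r2.cloudflarestorage.com):
#   aws s3 cp s3://YOUR_BUCKET/wordpress-latest.tar.gz /tmp/site.tar.gz
#   restore_site /tmp/site.tar.gz /mnt/wordpress
```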

Persistence is handled separately. You can run a scheduled sync job that dumps the database and files into object storage so you can pull the latest known good copy on boot.
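A minimal version of that sync job might look like this. The function name, archive naming scheme, bucket, and script path are all hypothetical placeholders, and the database dump and upload are left as comments since they depend on your credentials and storage client.

```bash
# snapshot_site: tar up the web root into a timestamped archive in outdir and
# print the archive's path.
snapshot_site() {
  src=$1
  outdir=$2
  stamp=$(date -u +%Y%m%dT%H%M%SZ)
  archive="$outdir/wordpress-$stamp.tar.gz"
  mkdir -p "$outdir" || return 1
  tar -czf "$archive" -C "$src" . || return 1
  printf '%s\n' "$archive"
}

# The rest of the job, as comments (names and paths are placeholders):
#   mariadb-dump --single-transaction --all-databases > "$outdir/db-$stamp.sql"
#   aws s3 cp "$archive" s3://YOUR_BUCKET/snapshots/
#   aws s3 cp "$outdir/db-$stamp.sql" s3://YOUR_BUCKET/snapshots/
# A cron.d entry running a wrapper script every 15 minutes:
#   */15 * * * * root /usr/local/bin/snapshot-site.sh
```

Whatever interval you pick is your data-loss window: anything written to the RAM-backed files or database after the last successful snapshot is gone on reboot.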

RAMDisk-Backed MariaDB

Yes, the database runs in RAM too. No disk writes. No durable journaling or recovery logs, since those land in RAM along with everything else. Just pure transient speed.

MariaDB is configured to store tables, indexes, logs, and temp files on its own tmpfs volume, mounted at /mnt/mariadb.

That means:

- All reads and writes happen directly in memory

- No disk I/O during queries or transactions

- Temp tables never spill to disk

- Indexes and joins stay hot, and buffer pool misses are served from RAM instead of waiting on disk
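For illustration, a minimal option file that puts MariaDB on that tmpfs could look like this. The data and tmp subdirectory names and the file name are my assumptions, not necessarily what the playbook writes. Because the tmpfs comes up empty on every boot, the data directory has to be initialized again before the latest .sql dump can be imported.

```bash
# write_mariadb_ram_conf: write a MariaDB option file that keeps the data
# directory and temp files on the tmpfs mounted at /mnt/mariadb.
# Argument: output path, e.g. /etc/mysql/mariadb.conf.d/60-ram.cnf on Debian.
write_mariadb_ram_conf() {
  cat > "$1" <<'EOF'
[mysqld]
# Tables, indexes and InnoDB logs are created under datadir by default.
datadir = /mnt/mariadb/data
# Temporary tables that outgrow memory, and filesort files, go here.
tmpdir = /mnt/mariadb/tmp
EOF
}

# On each boot, before importing the dump (as root):
#   mkdir -p /mnt/mariadb/data /mnt/mariadb/tmp
#   chown -R mysql:mysql /mnt/mariadb
#   mariadb-install-db --user=mysql --datadir=/mnt/mariadb/data
```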

Persistence is addressed in the previous section.

Debian 12 on AMD EPYC (Or Xeon)

Debian 12 gives us a clean, stable, modern base with predictable behavior and long-term support. The default packages aren’t bleeding edge, which is good. We don’t want a distro trying to be clever, we’re handling cleverness ourselves in Ansible.

Running on AMD EPYC is a deliberate choice. EPYC offers:

- High memory bandwidth which is critical for RAM-based hosting.

- Large L3 caches, which are excellent for high-frequency page builds.

Intel is fine, but EPYC hits that sweet spot for serious throughput without the thermal issues under load.

NGINX Manually Compiled

When I was working on this, the NGINX package in the Debian repository wasn’t built with QUIC support, so I had to compile it from source. The process looks like this:

- Pull source from the nginx-quiche branch (Cloudflare’s HTTP/3 and QUIC implementation)

- Compile with selected modules, including:

  - Brotli for static asset compression. Smaller than gzip, faster to serve.

  - ngx_devel_kit and ngx_http_lua_module for inlining CSS/JS, manipulating headers, or injecting logic without touching PHP.

- Strip out unneeded modules like mail, stream, and autoindex. This keeps the binary small, focused, and less vulnerable.
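Here is a rough sketch of the configure arguments for a build like that, assuming the module checkouts sit side by side in one directory (the directory names are assumptions). On upstream NGINX 1.25 or newer, HTTP/3 is built in via --with-http_v3_module; the nginx-quiche branch additionally has to be pointed at quiche and BoringSSL, so check that branch’s own build instructions for those options.

```bash
# nginx_configure_args: print one ./configure argument per line for a build
# that installs under /usr/local/nginx, enables HTTP/2 and HTTP/3, adds
# Brotli and the Lua modules, and drops autoindex.
# Argument: directory holding the module checkouts (names are assumptions).
nginx_configure_args() {
  mods=$1
  printf '%s\n' \
    --prefix=/usr/local/nginx \
    --pid-path=/run/nginx.pid \
    --with-http_ssl_module \
    --with-http_v2_module \
    --with-http_v3_module \
    --without-http_autoindex_module \
    "--add-module=$mods/ngx_brotli" \
    "--add-module=$mods/ngx_devel_kit" \
    "--add-module=$mods/lua-nginx-module"
}

# mail and stream are only built when --with-mail / --with-stream are passed,
# so leaving those flags out is what strips them.
# The Lua module finds LuaJIT through two environment variables, e.g.:
#   export LUAJIT_LIB=/usr/local/lib
#   export LUAJIT_INC=/usr/local/include/luajit-2.1
#   ./configure $(nginx_configure_args /usr/local/src) && make && make install
```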

The result is a lightweight, high-performance binary tuned for:

- Serving static files directly from RAM

- Fast(er) TLS handshakes over HTTP/3. Despite using Cloudflare’s HTTP/3 at the edge, I didn’t want the protocol getting downgraded when doing origin pulls, so I wanted HTTP/3 all the way down the path.

We override the default systemd unit to point to /usr/local/nginx/sbin/nginx, so package upgrades do not overwrite the build.
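A sketch of that override as a drop-in, assuming the packaged nginx.service is still installed and that the build used --pid-path=/run/nginx.pid so the PID file matches what the unit tracks. The helper name is hypothetical. An empty ExecStart= style assignment clears the packaged command before the new one is set.

```bash
# write_nginx_override: write a systemd drop-in that points the packaged
# nginx unit at the source-built binary. Argument: drop-in directory,
# normally /etc/systemd/system/nginx.service.d on the real server.
write_nginx_override() {
  mkdir -p "$1" || return 1
  cat > "$1/override.conf" <<'EOF'
[Service]
# Each empty assignment clears the packaged command before ours is added.
ExecStartPre=
ExecStartPre=/usr/local/nginx/sbin/nginx -t -q
ExecStart=
ExecStart=/usr/local/nginx/sbin/nginx
ExecReload=
ExecReload=/usr/local/nginx/sbin/nginx -s reload
PIDFile=/run/nginx.pid
EOF
}

# Then, as root:
#   write_nginx_override /etc/systemd/system/nginx.service.d
#   systemctl daemon-reload && systemctl restart nginx
```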

The Ansible Playbook Itself

Here’s the order of what the Ansible playbook is doing when it runs:

- Installs dependencies

- Downloads NGINX and module source code

- Compiles everything with your desired flags

- Writes nginx.conf, mime.types, fastcgi_params and the Lua logic file

- Mounts /mnt/wordpress as tmpfs

- Extracts the tarball from object storage into RAM

- Configures PHP-FPM and MariaDB

- Makes MariaDB run from RAM in its own RAMDisk, mounted at /mnt/mariadb

- Imports the latest .sql file from object storage

- Places the systemd unit overrides
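The reboot shortcut presumably comes from Ansible’s idempotence rather than anything exotic. As a rough shell model of that split (hypothetical step names, with an existing compiled NGINX binary standing in for “this VM was already built”), persistent work runs once while everything that lives in RAM runs on every boot.

```bash
# Hypothetical model of the playbook's order. Each step only prints its name.
# NGINX_BIN can be overridden; its presence marks an already-built VM.
NGINX_BIN=${NGINX_BIN:-/usr/local/nginx/sbin/nginx}

step() {
  printf '%s\n' "$1"
}

provision() {
  # Decide once, up front, whether this is a fresh VM or a reboot.
  if [ -x "$NGINX_BIN" ]; then fresh=no; else fresh=yes; fi

  # One-time work: its results live on the persistent disk.
  if [ "$fresh" = yes ]; then
    step install-dependencies
    step download-sources
    step compile-nginx
    step write-nginx-config
  fi
  # RAM-backed state is gone after a reboot, so these always run.
  step mount-wordpress-tmpfs
  step extract-site-tarball
  if [ "$fresh" = yes ]; then step configure-php-fpm-and-mariadb; fi
  step mount-mariadb-tmpfs
  step import-latest-sql
  if [ "$fresh" = yes ]; then step install-systemd-overrides; fi
}
```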

How To Install This Stack

Here are the steps to configure the Ansible Playbook that builds out the configuration.

Before you do anything, go out and provision a new VM at your cloud hosting provider. I recommend anything with 8 GB of RAM and 2 vCPUs.
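Before provisioning, it is worth checking that your tmpfs caps fit. Here is a back-of-the-envelope helper; the numbers in the example are hypothetical. tmpfs only consumes RAM for what is actually stored in it, but the caps are what you have to be able to honor, and the reserve needs to cover PHP-FPM workers, the OS, and MariaDB’s buffer pool, which caches pages that already sit in the tmpfs (so that data is effectively held in RAM twice).

```bash
# ram_budget_ok: succeed when the tmpfs caps plus a reserve for PHP-FPM,
# MariaDB's buffer pool and the OS fit within total RAM. Values in MiB.
ram_budget_ok() {
  total=$1 wp=$2 db=$3 reserve=$4
  [ $((wp + db + reserve)) -le "$total" ]
}

# total_ram_mib: MemTotal in /proc/meminfo is reported in kB (KiB).
total_ram_mib() {
  awk '/^MemTotal:/ { printf "%d\n", $2 / 1024 }' /proc/meminfo
}

# Example with hypothetical caps: 2 GiB site, 2 GiB database, 3 GiB reserve.
#   ram_budget_ok "$(total_ram_mib)" 2048 2048 3072 && echo fits
```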

1.) Clone the git repository. If you are running git from the command line, run:

$ git clone git@github.com:rickconlee/wordpress-from-ram.git

2.) Tweak these files to work with your fresh server:

- wordpress-from-ram/inventory.ini-template

- wordpress-from-ram/site.yml-template

Fill out the “change me” or the <your_var_here> lines in these files to match your setup, and save them as inventory.ini and site.yml, which are the names the command below expects.

3.) Run the following command:

$ ansible-playbook -i inventory.ini site.yml

After about 10 minutes, this will install the entire stack with a fresh WordPress install, with the whole thing running from RAM. If this is a reboot, Ansible will skip most of the steps and just run the dump + haul process from object storage, which should only take 1-2 minutes depending on the size of the downloads. For me, a rebooted “reprovision” takes about 1 min 35 sec.
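Once it finishes, a quick way to confirm the web root and database really are RAM-backed is to ask for the filesystem type behind each path. This is a small sketch using GNU stat; the function names are hypothetical.

```bash
# fs_type: print the filesystem type backing a path (GNU coreutils stat).
fs_type() {
  stat -f -c %T "$1"
}

# check_ram_backed: print each path with its filesystem type, and return
# non-zero if any path is missing or not on tmpfs.
check_ram_backed() {
  rc=0
  for p in "$@"; do
    t=$(fs_type "$p") || { rc=1; continue; }
    printf '%s %s\n' "$p" "$t"
    [ "$t" = tmpfs ] || rc=1
  done
  return $rc
}

# On the provisioned VM:
#   check_ram_backed /mnt/wordpress /mnt/mariadb
```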